We had an interesting issue on one of our web servers today that caused a bit of confusion. The machine was running properly and Apache was serving pages, but MySQL was producing write errors for the /tmp directory.

After checking that there was enough disk space left and that the file system wasn’t locked, I turned to Google to see what else could make MySQL produce such an error. Interestingly, MySQL was a red herring: the real problem was with the filesystem.

The server had run out of inodes (index nodes), the data structures a filesystem uses to hold information about each file. Every file and directory uses one inode, and filesystems such as ext4 have a fixed number of them set at creation, so a large number of tiny files can exhaust the supply while plenty of disk space remains. Those files have to be hunted down and removed. In our case, an application had been generating a session file each time a search bot or user hit the site, and using the commands below I was able to find 1.2 million files that needed to be removed. Once they were deleted, all was fine again and, if anything, the site was running a little better! We also took measures to try to stop this recurring.

What we did

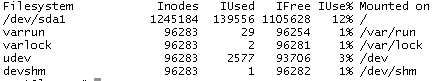

Run the df command and make sure that the disk is not full. (We had 60% free.)

Run df -i and check the inode usage. (We had used 100%)
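
As a rough sketch, the same check could be scripted so that only the filesystems running short of inodes are listed. This assumes GNU df on Linux (column layouts differ elsewhere); the function name filter_inode_usage and the 90% default threshold are made up for illustration.

```shell
# Reads `df -Pi` output on stdin and prints "mountpoint usage" for every
# filesystem whose inode usage is at or above the given percentage.
# Hypothetical helper: the name and the 90% default are assumptions.
# Usage: df -Pi | filter_inode_usage 90
filter_inode_usage() {
    awk -v t="${1:-90}" '
        # Skip the header row and rows that report "-" instead of a percentage.
        NR > 1 && $5 ~ /%$/ {
            use = $5
            sub(/%/, "", use)
            if (use + 0 >= t) print $6, $5
        }'
}
```

The -P flag keeps each filesystem on a single line, which makes the output easier to parse. Mount points containing spaces would not be handled by this sketch.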

If your usage is very high, you will need to locate the directory or directories that contain very large numbers of files. This command was found via a Google search and I can’t find the original source for a link… sorry… but it works perfectly so I am repeating it here for future reference!

Run for i in /*; do echo "$i"; find "$i" | wc -l; done

You will be shown a list of root directories and the number of files in each. With any luck, one of them will stand out as having a huge number of files in it. If one does, you will need to run the command again but look inside that directory by adding the directory name to the command. In this case I have added /usr, but in your case add the name of the directory you want to look in.

Run for i in /usr/*; do echo "$i"; find "$i" | wc -l; done
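
If you end up repeating this a few levels deep, a small helper that sorts the results can save some squinting. This is only a sketch of the same idea, not the command I actually ran; the function name count_entries and the default of 10 rows are assumptions.

```shell
# Print "count<TAB>path" for each immediate child of a directory, largest first.
# Hypothetical helper: the name and the default of 10 rows are assumptions.
count_entries() {
    dir="${1:-/}"
    rows="${2:-10}"
    for i in "${dir%/}"/*; do
        # Skip the unexpanded pattern in an empty directory (and dangling symlinks).
        [ -e "$i" ] || continue
        # -xdev keeps find on one filesystem, since inodes are counted per filesystem.
        printf '%s\t%s\n' "$(find "$i" -xdev | wc -l)" "$i"
    done | sort -rn | head -n "$rows"
}
```

For example, count_entries /usr 5 would list the five busiest entries under /usr. The counts include the directory itself, so they will be one higher than the number of things inside it.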

You can keep adding directories to the command until you find the directory with the files. At this point, as long as you have checked that the files are safe to remove, you just need to delete them to sort out the inode issue. The inodes are freed as soon as the files are gone, but bear in mind that the delete itself can take a few minutes if you have a lot of files to remove!

Run sudo rm -rf /usr/subfolder/lotsoffilesinhere

When this finishes, remember to run df -i again to check the inode usage, as it should be much lower now.

To stop this happening again, we have added a new cron job that will run daily and check the “problem” directory. This hasn’t been tested and confirmed yet but the idea is that the command will check the directory for files that have not been accessed for more than 7 days. These files will then be deleted. For reference, this is the command.

find folder -depth -type f -atime +7 -delete
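
Since the cron command is still untested, it may be worth wrapping it so that it only lists files until you are happy with what it finds. The sketch below assumes a find that supports -delete (GNU and BSD find do); the function name purge_stale_files and its arguments are made up for illustration. Also note that on filesystems mounted with noatime, access times are not updated on reads, so -atime may match files that are still in use.

```shell
# List, or delete, regular files under a directory that were last accessed
# more than N days ago. Lists only unless the third argument is "delete".
# Hypothetical helper: the name, arguments and defaults are assumptions.
purge_stale_files() {
    dir="$1"
    days="${2:-7}"
    mode="${3:-list}"
    [ -d "$dir" ] || { echo "not a directory: $dir" >&2; return 1; }
    if [ "$mode" = "delete" ]; then
        find "$dir" -type f -atime +"$days" -delete
    else
        find "$dir" -type f -atime +"$days" -print
    fi
}
```

Running purge_stale_files folder 7 shows what would go, and purge_stale_files folder 7 delete actually removes it.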